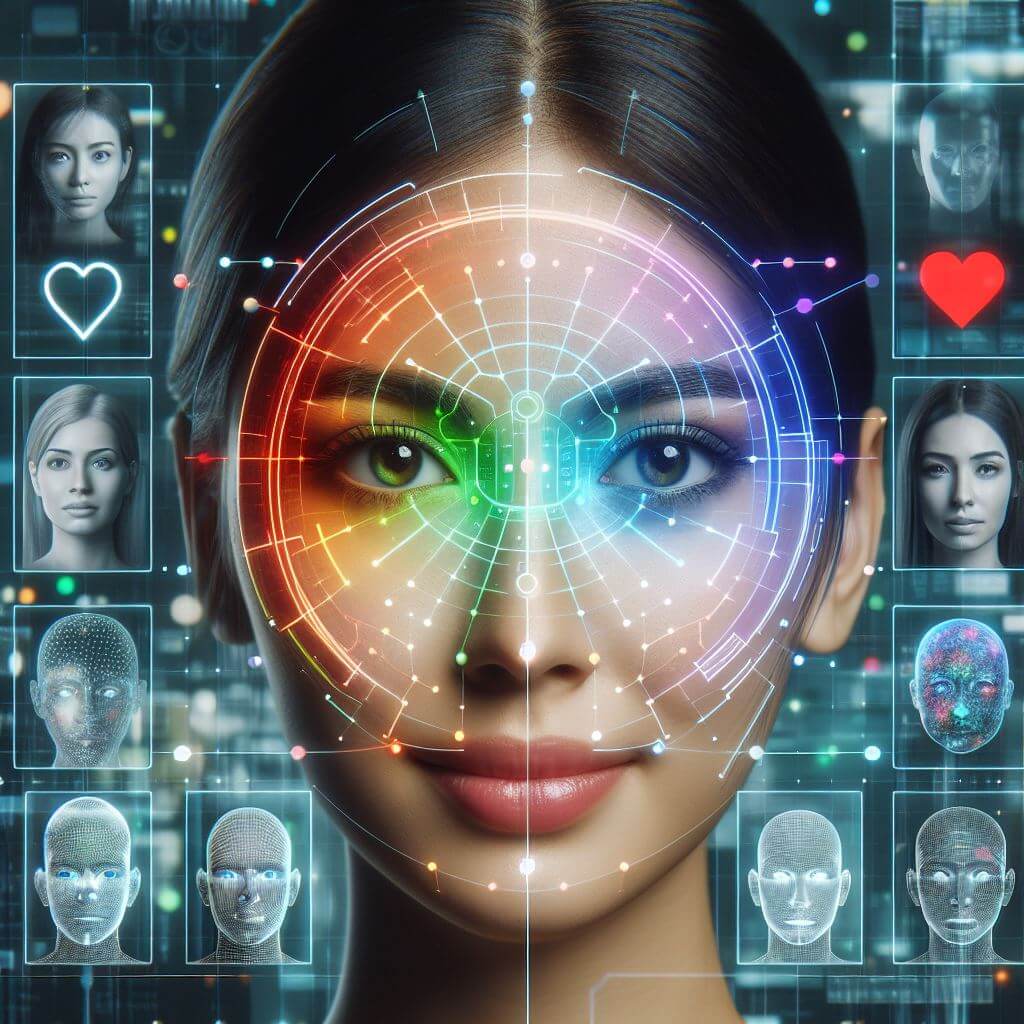

Affective computing is an intriguing field at the intersection of computer science, psychology, and cognitive science. Its main goal is to develop systems and devices that can recognize, interpret, and process human emotions. The significance of affective computing lies in its ability to bridge the gap between the cold precision of machines and the warm complexity of human feelings, enabling a new level of interaction between humans and technology.

The Role of Emotion Recognition

Emotion recognition is a key component of affective computing and is central to the effort to make technology more responsive and interactive. It goes beyond a purely technical achievement, opening the door to devices that not only understand commands but also perceive the emotional context behind them. Emotion recognition spans the diverse range of human emotions, using machine learning to decipher subtle cues in tone of voice, facial expressions, and even body language. Each of these channels offers a distinct window into a person’s emotional state and serves as a rich source of data for analysis.

The process begins with the careful collection of emotional data, which is then fed to machine-learning algorithms trained to classify those emotions. This task is far from simple: emotions are inherently complex and can manifest in many different expressions that vary not only from person to person but also across social and cultural settings. For example, a smile usually signifies happiness, but its meaning can change dramatically with context, as when a polite smile masks discomfort or disagreement. Similarly, a vocal tone that conveys annoyance in one culture may be read as passionate engagement in another.

Machine learning algorithms must therefore be trained on diverse datasets that capture this wide range of emotional expressions. During training, the algorithms learn to associate specific patterns in the data with the corresponding emotional states. This requires them to recognize not only the obvious signs of emotion but also the subtleties and complexities that underlie human feelings.
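The train-then-classify loop described above can be sketched with a nearest-centroid classifier, a deliberately simple stand-in for the more sophisticated algorithms real systems use. The three features (mean pitch, loudness, smile intensity), their values, and the emotion labels are all hypothetical. The features are also left unscaled for brevity; in practice they would be standardized, because otherwise the pitch values, being much larger numbers, dominate the distance.

```python
from math import dist

# Hypothetical labeled training data. Each vector is
# (mean pitch in Hz, loudness in dB, smile intensity from 0 to 1).
# Features, values, and labels are illustrative assumptions only.
TRAINING_DATA = [
    ((220.0, 65.0, 0.9), "happy"),
    ((230.0, 68.0, 0.8), "happy"),
    ((180.0, 50.0, 0.1), "sad"),
    ((170.0, 48.0, 0.2), "sad"),
    ((260.0, 80.0, 0.0), "angry"),
    ((250.0, 78.0, 0.1), "angry"),
]


def train_centroids(samples):
    """Average the feature vectors of each label into one centroid per label."""
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: tuple(s / counts[label] for s in sums[label]) for label in sums}


def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


centroids = train_centroids(TRAINING_DATA)
print(classify((225.0, 66.0, 0.85), centroids))
```

Every new example is assigned to the emotion whose "average example" it most resembles; richer models replace the averaging step with learned decision boundaries, but the overall shape (fit on labeled data, then predict) stays the same.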

In addition, emotion recognition benefits from the continuous evolution of machine learning models, which grow more sophisticated with each iteration. These models are increasingly able to cope with the variability and ambiguity inherent in human emotions, paving the way for more precise and nuanced emotional understanding by machines. The ultimate goal is more intuitive and meaningful interaction between humans and technology, in which machines respond accurately not only to explicit commands but also to the implicit emotional cues that accompany them, improving the overall user experience and creating a more holistic human-machine interface.

Contribution of Machine Learning

Machine learning is a cornerstone of affective computing, driving significant advances in the field’s ability to recognize and interpret human emotion. This branch of artificial intelligence differs from traditional computing approaches by favoring data-driven learning over explicit programming, allowing machines to adapt based on the information they process. This makes it particularly well suited to emotion recognition, a domain defined by the complexity and subtlety of its subject: human emotions.

At the core of machine learning’s contribution to affective computing is pattern recognition. Using statistical algorithms and neural network models, machine learning systems can sift through large datasets of emotional expressions, whether facial expressions, vocal nuances, or physiological signals, and identify complex patterns that may elude human observers. This ability is critical given the variety and subtlety of emotional displays. For example, slight differences in facial movements or vocal intonation can signal quite different underlying emotions, and machine learning models can learn to detect such nuances.
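Before any pattern can be learned, raw signals have to be turned into numbers. A minimal sketch for the vocal channel, assuming a mono signal given as a list of samples between -1 and 1 and a hypothetical 8 kHz sample rate, computes two standard low-level acoustic descriptors per frame: root-mean-square energy (related to loudness) and zero-crossing rate (related to how rapidly the waveform oscillates). The synthetic "calm" and "agitated" tones are illustrative only; which emotions such features correlate with is something a model learns from labeled data, not something this code decides.

```python
import math

SAMPLE_RATE = 8000  # assumed sample rate in Hz


def rms_energy(frame):
    """Root-mean-square amplitude of a frame: a rough proxy for loudness."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign differs."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def frame_features(samples, frame_size):
    """Split a signal into non-overlapping frames and describe each one."""
    features = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        features.append((rms_energy(frame), zero_crossing_rate(frame)))
    return features


# Synthetic test signal: a quiet 100 Hz tone followed by a louder 400 Hz tone.
calm = [0.2 * math.sin(2 * math.pi * 100 * n / SAMPLE_RATE) for n in range(800)]
agitated = [0.8 * math.sin(2 * math.pi * 400 * n / SAMPLE_RATE) for n in range(800)]
feats = frame_features(calm + agitated, frame_size=400)
for energy, zcr in feats:
    print(f"energy={energy:.3f} zcr={zcr:.3f}")
```

Real systems use many more descriptors (pitch contours, spectral features, facial landmarks), but each one plays the same role: a compact numeric summary in which small shifts in expression become measurable differences.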

Deep learning, a subset of machine learning, extends this capability further by using multi-layer neural networks that learn useful features directly from large amounts of data rather than relying on hand-engineered ones. This is particularly useful for emotion recognition, where the input is often high-dimensional (such as video frames of facial expressions or complex vocal patterns). Deep learning models can exploit this complexity to infer emotional states from cues too subtle for simpler machine learning approaches or for human analysts.
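The layered structure of a deep network can be illustrated with a forward pass through a small multi-layer perceptron. This is a minimal sketch under clear assumptions: the three input features, the four emotion labels, and the layer sizes are hypothetical, and the weights are random rather than trained. Production emotion models typically use convolutional or recurrent architectures operating on raw pixels or audio and learn their weights by backpropagation, which is omitted here.

```python
import math
import random

# Hypothetical label set, chosen only for illustration.
EMOTIONS = ["happy", "sad", "angry", "neutral"]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    """Nonlinearity between layers; without it, stacked layers collapse into one linear map."""
    return [max(0.0, v) for v in values]


def softmax(logits):
    """Convert raw scores into probabilities; subtracting the max avoids overflow in exp."""
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(features, layers):
    """Pass the input through each (weights, biases) layer, ReLU between layers, softmax at the end."""
    activations = features
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return softmax(activations)


def init_layer(rng, n_in, n_out):
    """Random, untrained weights; a real model would learn these by backpropagation."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
layers = [init_layer(rng, 3, 8), init_layer(rng, 8, 8), init_layer(rng, 8, len(EMOTIONS))]
probs = forward([0.2, -0.4, 0.9], layers)
print({label: round(p, 3) for label, p in zip(EMOTIONS, probs)})
```

Each hidden layer re-describes the output of the previous one; training adjusts those descriptions so that the final layer separates the emotion classes well.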

Because machine learning models are iterative, each additional batch of data gives the system a chance to refine its understanding and improve its accuracy. This ongoing learning is critical for emotion recognition systems, because it lets them adapt to the individual ways in which people express emotions. It also allows these systems to keep evolving and stay relevant as social norms and emotional expression change over time.
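Incremental learning can be sketched by letting a nearest-centroid model update its class averages one labeled example at a time instead of retraining from scratch. The class name `OnlineCentroidModel`, its `learning_rate` parameter, and the two-feature inputs (for example, smile intensity and normalized vocal energy) are hypothetical. With no learning rate, each centroid is the exact running mean of every example seen so far; with a fixed learning rate, it becomes an exponential moving average that weights recent examples more heavily, which is one simple way to let a model follow gradual changes in how emotions are expressed.

```python
import math


class OnlineCentroidModel:
    """Hypothetical nearest-centroid classifier that learns one labeled example at a time."""

    def __init__(self, learning_rate=None):
        # None -> exact running mean; a float in (0, 1] -> exponential moving average.
        self.learning_rate = learning_rate
        self.centroids = {}  # label -> list of feature values
        self.counts = {}     # label -> number of examples seen

    def update(self, features, label):
        if label not in self.centroids:
            self.centroids[label] = list(features)
            self.counts[label] = 1
            return
        self.counts[label] += 1
        step = self.learning_rate if self.learning_rate is not None else 1.0 / self.counts[label]
        centroid = self.centroids[label]
        for i, x in enumerate(features):
            # Move the centroid a fraction `step` of the way toward the new example.
            centroid[i] += step * (x - centroid[i])

    def predict(self, features):
        return min(self.centroids, key=lambda label: math.dist(features, self.centroids[label]))


model = OnlineCentroidModel()
stream = [((0.9, 0.8), "happy"), ((0.1, 0.2), "sad"), ((0.8, 0.9), "happy")]
for features, label in stream:
    model.update(features, label)
print(model.predict((0.85, 0.8)))  # happy
```

The trade-off is stability versus responsiveness: the running mean never forgets, while a fixed learning rate gradually discounts old data at the cost of noisier estimates.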

Prospects and Challenges

The horizons of affective computing, illuminated by the prospect of better emotion recognition, point to a future in which technology understands human emotions and responds to them with unprecedented depth and sensitivity. This vision is driving innovation across multiple sectors, with envisioned applications ranging from improving the user experience of digital interfaces to transformative roles in healthcare, education, and entertainment. Imagine, for example, educational software that detects a student’s frustration or boredom and adapts its teaching style in response, or medical chatbots that offer preliminary mental health assessments by analyzing patients’ facial expressions and speech patterns. Such applications highlight the profound impact that emotion-aware technology could have on societal well-being and on the delivery of individual services.

The path to realizing the full potential of affective computing is strewn with formidable problems that extend beyond the technical into the ethical and sociocultural spheres. The complex nature of human emotions is itself a major obstacle: emotions are not always expressed or experienced in the same way. Cultural norms shape how emotions are expressed and perceived, so systems must be not only accurate but also culturally sensitive and adaptive. Furthermore, because emotions are experienced subjectively, a one-size-fits-all approach is implausible. Individual differences in emotional expression call for personalized systems that can learn and adapt to each user’s unique emotional landscape.
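One common way to account for individual differences is to express each measurement relative to the user’s own baseline rather than against a population-wide threshold. The sketch below assumes a single hypothetical feature (mean vocal pitch in Hz) and a short calibration set recorded while each user is at rest; the `UserBaseline` class is illustrative, not an established API. Two users whose raw pitch differs by 90 Hz end up with the same normalized score when each deviates equally from their own norm.

```python
from statistics import mean, pstdev


class UserBaseline:
    """Hypothetical per-user z-score normalization of one feature."""

    def __init__(self, baseline_values):
        self.mean = mean(baseline_values)
        # Fall back to 1.0 if the calibration values have no spread, to avoid dividing by zero.
        self.std = pstdev(baseline_values) or 1.0

    def normalize(self, value):
        """How many baseline standard deviations a value lies from this user's norm."""
        return (value - self.mean) / self.std


# Calibration pitch values (Hz) for two users with different natural voices.
user_a = UserBaseline([110, 120, 130])
user_b = UserBaseline([200, 210, 220])

# Different raw pitches, same deviation relative to each user's own baseline.
print(round(user_a.normalize(135), 3), round(user_b.normalize(225), 3))
```

Normalization of this kind addresses differences in baseline, not differences in what an expression means in a given culture; the latter still has to be learned from representative data.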

Another urgent challenge is preserving privacy and upholding ethical standards amid the technological possibilities of emotion interpretation. Collecting and analyzing emotional data raises delicate questions about an individual’s right to privacy and the potential for misuse. It is paramount that such technologies operate within a framework that prioritizes consent, transparency, and security. There is also a risk that emotional data will be used for manipulative advertising or for surveillance, which raises ethical concerns about the extent and manner of its use.

The accuracy and reliability of emotion recognition technology remain under constant scrutiny. Although advances in machine learning and data processing have markedly improved performance, misinterpretations still occur, with significant consequences for applications in sensitive areas such as mental health assessment or safety. Ensuring the reliability of emotion recognition technology therefore remains a critical, ongoing challenge for researchers and developers.
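Two simple safeguards follow from this concern. A single overall accuracy figure can hide a model that systematically misses one emotion, so per-class recall is reported instead; and in sensitive settings a system can abstain and defer to a human when its top prediction is not confident enough. The function names, labels, and the 0.7 threshold below are hypothetical choices for illustration, and a confidence threshold is only meaningful if the model’s probabilities are reasonably well calibrated.

```python
from collections import Counter


def per_class_recall(y_true, y_pred):
    """For each true label, the fraction of its examples the model labeled correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


def predict_or_abstain(probabilities, threshold=0.7):
    """Return the most likely label only if its probability reaches the threshold, else None."""
    label, p = max(probabilities.items(), key=lambda item: item[1])
    return label if p >= threshold else None


y_true = ["happy", "happy", "sad", "sad", "angry"]
y_pred = ["happy", "sad", "sad", "sad", "happy"]
print(per_class_recall(y_true, y_pred))
print(predict_or_abstain({"happy": 0.55, "sad": 0.45}))
print(predict_or_abstain({"happy": 0.90, "sad": 0.10}))
```

In this toy evaluation, overall accuracy is 60%, yet the model never recognizes "angry" at all, which is exactly the kind of failure a single aggregate number conceals.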