Predictive modeling has become an indispensable tool in fields ranging from finance to healthcare. At the heart of this shift is machine learning, a subset of artificial intelligence that uses algorithms to parse data, learn from it, and make predictions about the world. One algorithm that has drawn particular attention from practitioners is Random Forest.

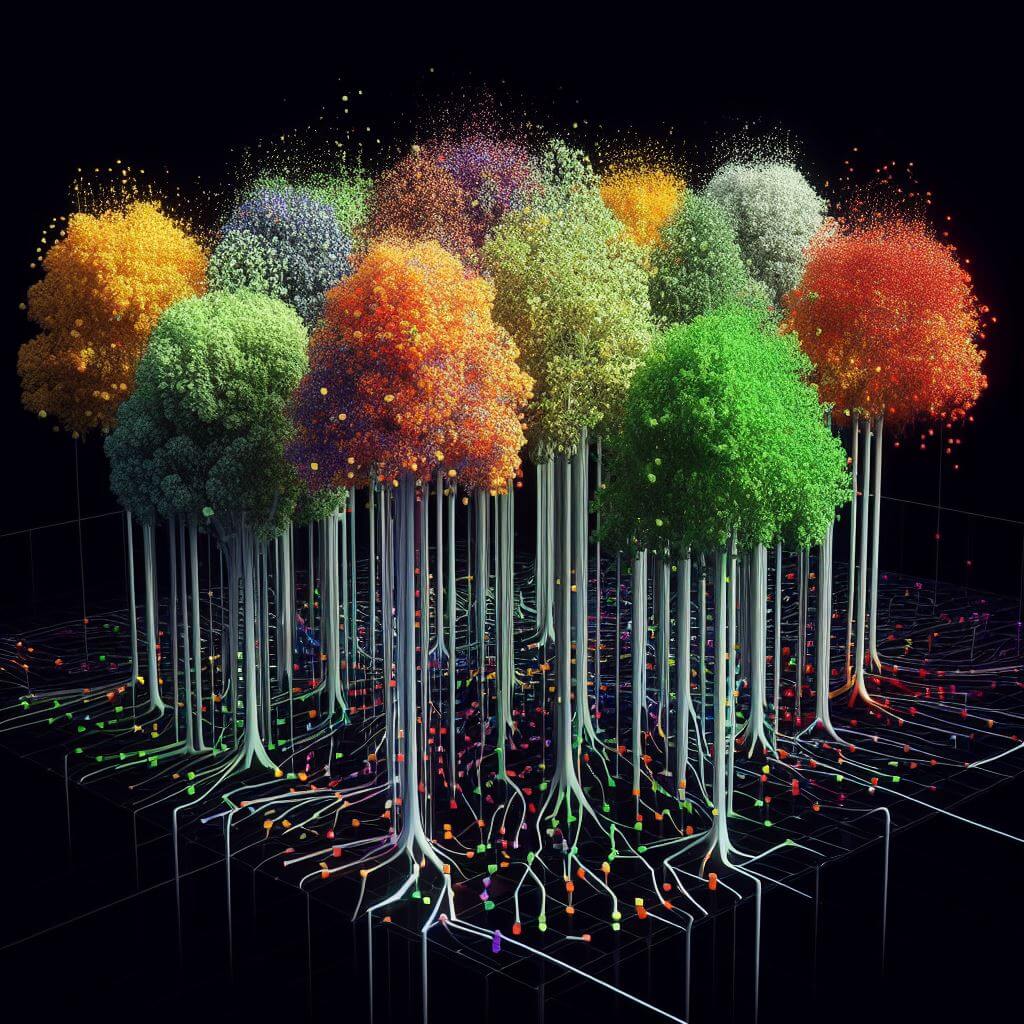

The Random Forest algorithm works by constructing many decision trees during training and combining their outputs. Imagine a forest where each tree is an individual model contributing its vote towards the final prediction. In Random Forest, decision trees serve as the base learners of the ensemble. A single decision tree is typically built by splitting the dataset into branches according to decision criteria derived from the features in the data. These criteria are chosen so that each split divides the dataset into subsets that are as pure as possible with respect to the target variable.

Random Forest enhances this process by building many trees, where each tree is grown on a random sample of the data and considers a random subset of the features at each split. This randomness helps counter the high variance of individual decision trees (their tendency to overfit the data on which they are trained) through the collective decision-making of the forest as a whole. The idiosyncratic errors of individual trees tend to average out, and what emerges is a more generalizable and robust predictive model.

Bootstrap Aggregating, or bagging, is fundamental to Random Forests. Bagging draws multiple samples from the original dataset with replacement, meaning the same data point can appear more than once in a given sample, and uses each sample as the training set for one tree. Each tree sees a slightly different sample of the data, so it learns slightly different patterns. Combined, these patterns give the forest a view of the data from multiple perspectives.
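The sampling step can be sketched in a few lines of plain Python. This is a minimal illustration rather than the code of any particular library; the names `bootstrap_sample` and `make_bootstrap_sets` are made up for this example, and the "dataset" is just a list of row indices.

```python
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows with replacement."""
    return [rows[rng.randrange(len(rows))] for _ in rows]


def make_bootstrap_sets(rows, n_trees, seed=0):
    """Build one bootstrap training set per tree (hypothetical helper)."""
    rng = random.Random(seed)
    return [bootstrap_sample(rows, rng) for _ in range(n_trees)]


rows = list(range(10))  # stand-in for ten training rows
training_sets = make_bootstrap_sets(rows, n_trees=3)
for tree_index, sample in enumerate(training_sets):
    # Repeated values are rows drawn more than once; missing values
    # are rows this particular tree never sees.
    print(tree_index, sorted(sample))
```

Each sample has the same size as the original data, but because rows are drawn with replacement, some appear several times and others are left out.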

When constructing the trees, only a subset of features is considered for each split. This ensures that the trees in the forest are not overly correlated with one another. If trees were to consider all the features, they would likely make similar decisions at each level, leading to similar trees that share the same errors. By limiting the features at each decision point, Random Forests ensure that the trees diverge in their pathways, capturing unique aspects of the data missed by others.
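As a rough sketch of that idea, the function below picks the candidate features for a single split. The name `candidate_features` is invented for this example, and the square-root default is an assumption here: it is a common choice for classification, but the article does not prescribe a value.

```python
import math
import random


def candidate_features(n_features, rng, max_features=None):
    """Pick the feature indices one split is allowed to consider."""
    if max_features is None:
        # Assumed default: about sqrt(n_features) candidates per split.
        max_features = max(1, int(math.sqrt(n_features)))
    # rng.sample draws without replacement, so indices are unique.
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(42)
# Two different splits usually see two different sets of candidates.
first_split = candidate_features(16, rng)
second_split = candidate_features(16, rng)
print(first_split, second_split)
```

A tree would call something like this again at every split, so even two trees trained on the same sample can end up with different structures.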

Another aspect of the Random Forest algorithm that contributes to accuracy and robustness is the way it combines the trees’ predictions. For classification problems, the mode of the predictions from all trees is taken. For regression problems, the mean prediction across the trees is usually calculated.
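The two aggregation rules are simple enough to show directly. This is an illustrative sketch with made-up function names and made-up per-tree predictions, not output from a trained model.

```python
from collections import Counter
from statistics import mean


def aggregate_classification(votes):
    """Return the most common class label among the trees' votes."""
    return Counter(votes).most_common(1)[0][0]


def aggregate_regression(predictions):
    """Return the average of the trees' numeric predictions."""
    return mean(predictions)


# Hypothetical predictions from three trees for a single data point.
label = aggregate_classification(["churn", "stay", "churn"])  # "churn"
value = aggregate_regression([2.0, 4.0, 6.0])                 # 4.0
print(label, value)
```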

Alongside prediction capabilities, Random Forests also have built-in ways of assessing the importance of different features. The algorithm can measure how much each feature contributes to decreasing the weighted impurity in a decision tree, adding up this decrease across all trees to judge the predictive power of a feature. This is a significant added value, as it provides insights into the dataset, highlighting which factors are significant contributors to the target variable.
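To make the impurity-based measure concrete, here is a small sketch that uses Gini impurity and two hand-written splits. The helper names, the feature names `age` and `income`, and the choice to normalize the totals so they sum to 1 are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(parent, left, right, n_total):
    """Impurity decrease of one split, weighted by the node's share of the data."""
    n = len(parent)
    children = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return (n / n_total) * (gini(parent) - children)


def accumulate_importance(splits, n_total):
    """Sum the decreases per feature over all splits, then normalize."""
    totals = defaultdict(float)
    for feature, parent, left, right in splits:
        totals[feature] += impurity_decrease(parent, left, right, n_total)
    total = sum(totals.values())
    return {f: v / total for f, v in totals.items()} if total else dict(totals)


# Each entry: (feature used, labels at the node, labels sent left, labels sent right).
splits = [
    ("age", [0, 0, 1, 1], [0, 0], [1, 1]),     # separates the classes perfectly
    ("income", [0, 0, 1, 1], [0, 1], [0, 1]),  # leaves both children mixed
]
importances = accumulate_importance(splits, n_total=4)
print(importances)
```

In this toy case all of the importance goes to `age`, because the `income` split does not reduce impurity at all.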

Random Forests can also use what are known as proximity measures to quantify how similar data points are. After the trees are grown, every data point is passed down each tree, and whenever two points end up in the same terminal node, their proximity is increased by one. At the end of the process, the proximities can be normalized to fall between 0 and 1 (for example, by dividing by the number of trees) and used for purposes such as clustering, outlier detection, or imputation, where missing values are inferred from the most similar data points.
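The counting procedure can be sketched as follows. The input format is an assumption for this example: `leaf_ids[t][i]` is taken to be the terminal node that data point `i` reaches in tree `t`, and the values below are invented rather than taken from a trained forest.

```python
def proximity_matrix(leaf_ids):
    """Fraction of trees in which each pair of points shares a terminal node."""
    n_trees = len(leaf_ids)
    n_points = len(leaf_ids[0])
    counts = [[0] * n_points for _ in range(n_points)]
    for tree_leaves in leaf_ids:
        for i in range(n_points):
            for j in range(n_points):
                if tree_leaves[i] == tree_leaves[j]:
                    counts[i][j] += 1
    # Dividing by the number of trees keeps every value between 0 and 1.
    return [[c / n_trees for c in row] for row in counts]


# Two trees, three data points (hypothetical leaf assignments).
leaf_ids = [
    [0, 0, 1],  # tree 0: points 0 and 1 share a leaf
    [2, 3, 3],  # tree 1: points 1 and 2 share a leaf
]
prox = proximity_matrix(leaf_ids)
for row in prox:
    print(row)
```

Points 0 and 1 share a leaf in one of two trees, so their proximity is 0.5, while points 0 and 2 never meet and get 0.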

Advantages of Random Forests in Predictive Modeling

One of the primary benefits of using Random Forests in predictive modeling is their versatility. They can be used for both classification and regression tasks, which are common in many business applications. Random Forests can also handle large, high-dimensional datasets. They can manage thousands of input variables without requiring variables to be dropped beforehand, an advantage over algorithms that depend on a separate feature selection step.

Another strength of Random Forests is that they can be made to cope with missing values. Depending on the implementation, the algorithm may maintain accuracy by using surrogate splits or by imputing missing values from the proximities to other data points. This is particularly valuable in real-world scenarios where data is often imperfect and incomplete. Support varies between implementations, however, and some expect gaps to be filled in beforehand, so it is worth checking how a given library treats missing data.

Random Forests offer a degree of implicit feature selection. By preferring some features over others when splitting the decision trees, the algorithm indicates which features are more important in predicting the target variable. This insight can be extremely valuable in understanding the data and the problem at hand.

Applications and Real-world Use Cases

The application scope of Random Forests is broad and impactful. In the financial sector, Random Forests are used for credit scoring and predicting stock market movements. The healthcare industry uses this algorithm for diagnosing diseases, personalizing treatment, and anticipating hospital readmissions. In the e-commerce space, Random Forests help in recommendation systems, customer segmentation, and inventory forecasting.

Take, for example, the application of Random Forests in managing customer relationships. By analyzing customer data, Random Forest can predict which customers are likely to churn. This provides businesses with the opportunity to proactively address customer issues, tailor marketing strategies, and improve customer retention.

In environmental science, Random Forests are employed to predict natural disasters, analyze the effects of climate change on ecosystems, and even assist in wildlife conservation by predicting animal movements and identifying critical habitat areas.

Challenges and Considerations

Despite their many advantages, Random Forests are not without challenges. One of the main drawbacks is their complexity, which often results in longer training times than simpler models. Because the algorithm builds many trees to create the forest, the computational cost, and with it the time needed to build the model, grows in proportion to the number of trees.

Another area of consideration is interpretability. Decision trees are inherently interpretable, as they map out clear decision paths. Random Forests combine the output of multiple trees, which makes it more difficult to understand what is driving predictions. This can be a challenge when the model needs to be explained to stakeholders who are not data scientists, or in fields where regulatory compliance requires transparency.

While Random Forests handle overfitting better than individual decision trees, they can still be prone to it, especially if the trees are particularly deep. Hyperparameters such as the maximum tree depth, the minimum number of samples per leaf, and the number of features considered at each split need careful tuning so that the model does not adapt to the training data at the expense of generalization.
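One simple way to organize such tuning is an exhaustive grid search. The sketch below is generic and hypothetical: the parameter names are illustrative, and `toy_validation_score` is a placeholder that returns invented numbers, not real measurements. In practice it would be replaced by a function that trains a forest with the given settings and returns a held-out or cross-validated score.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid and keep the best-scoring one."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    """Placeholder scorer: made-up numbers that penalize very flexible trees."""
    score = 0.80
    if params["max_depth"] is None:       # unlimited depth
        score -= 0.05
    if params["min_samples_leaf"] == 1:   # leaves may hold a single sample
        score -= 0.02
    if params["n_trees"] == 300:
        score += 0.01
    return score


param_grid = {
    "n_trees": [100, 300],
    "max_depth": [4, 8, None],
    "min_samples_leaf": [1, 5],
}
best_params, best_score = grid_search(param_grid, toy_validation_score)
print(best_params, best_score)
```

The search itself is independent of the model; the important part is that the score comes from data the trees did not see during training.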