Recurrent Neural Networks, or RNNs, are a class of artificial neural networks designed to recognize patterns in sequences of data such as text, genomes, handwriting, or numerical time series data emanating from sensors, stock markets, and government agencies. Unlike traditional neural networks that process inputs independently, the RNN has connections that form directed cycles, allowing it to use its internal state (memory) to process sequences of inputs.

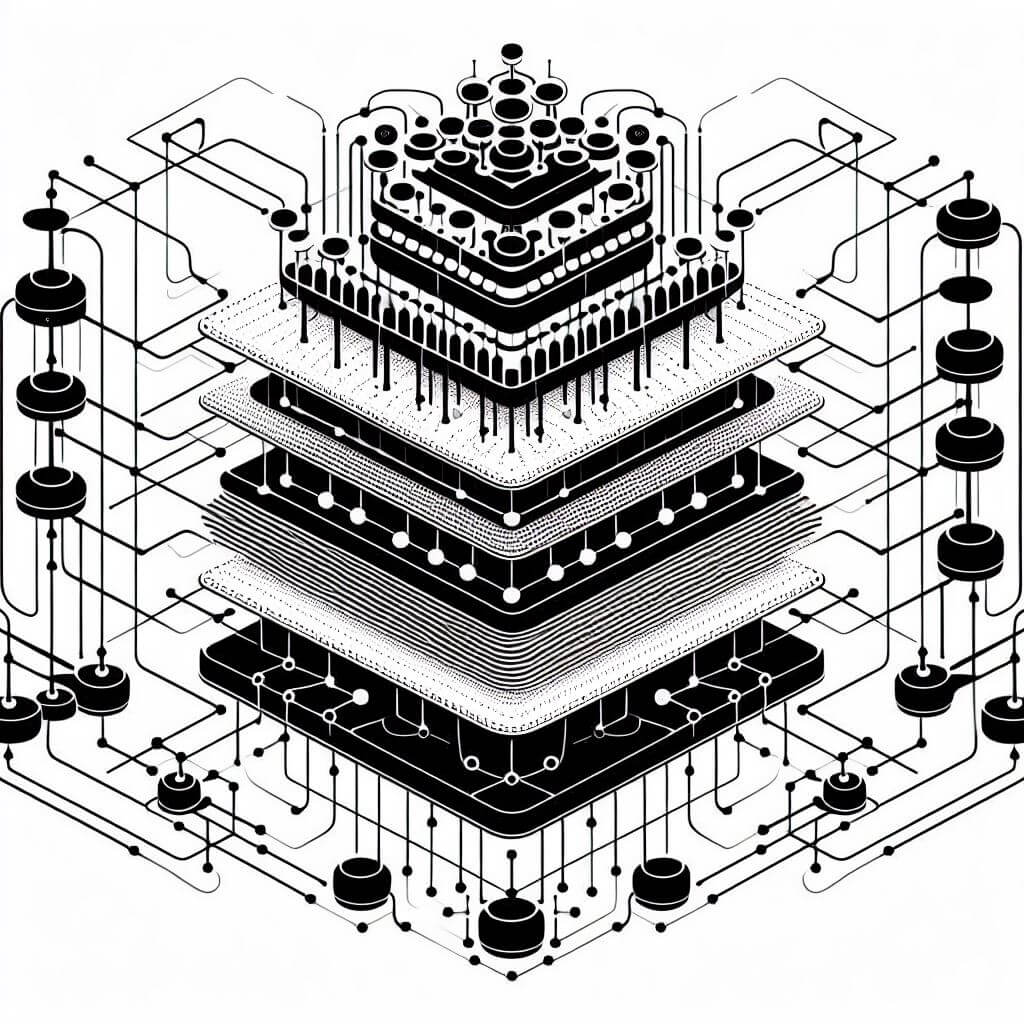

The architecture of Recurrent Neural Networks (RNNs) is a distinguishing feature that sets them apart from other neural network architectures. Unlike feedforward neural networks where the information moves in a single direction from input to output, RNNs have connections that loop back on themselves, enabling them to process input sequences of variable length and maintain internal state information.

The central concept of RNN architecture is a loop that acts as memory: the output of a neuron at one time step can influence the same neuron’s activity at the following time steps. Through this recurrent loop, the network can store and retrieve information over different periods. This internal memory mechanism allows RNNs to build an understanding of the context within a sequence, which is crucial for tasks that require knowledge of data points that appeared earlier in the sequence.

In an RNN, each node in the hidden layer looks deceptively similar to a standard neuron in a classical neural network, but it has an added temporal component. It takes as input not just an element from the sequence being processed, but also its own previous state. This recurrent self-connection is usually represented by a loop in a diagram of the network.

Each unit or cell within the hidden layer computes, at every time step, a weighted sum of the immediate input and the recurrent input (the previous hidden state), plus a bias, and passes that sum through a nonlinear activation function such as tanh or ReLU. The output of this activation function becomes the hidden state for this time step and is fed to the cells as the recurrent input at the next time step.
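The computation described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the names `rnn_step`, `W_xh`, `W_hh`, and `b_h` are assumptions chosen for this example, the weight values are arbitrary, and tanh is picked as the activation.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step of a plain RNN cell (illustrative sketch).

    x_t:    input vector at this step, length n_in
    h_prev: previous hidden state, length n_hidden
    W_xh:   n_hidden x n_in input weights
    W_hh:   n_hidden x n_hidden recurrent weights
    b_h:    bias vector, length n_hidden
    """
    h_t = []
    for i in range(len(b_h)):
        # Linear part: bias + input term + recurrent term.
        pre = b_h[i]
        pre += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        # The nonlinearity produces the new hidden state.
        h_t.append(math.tanh(pre))
    return h_t

# Arbitrary example values, not taken from any trained model.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.6]]
b_h = [0.0, 0.1]
h0 = [0.0, 0.0]
h1 = rnn_step([1.0, 2.0], h0, W_xh, W_hh, b_h)
```

The returned `h1` would be passed back in as `h_prev` on the next call, which is how the previous state reaches the cell at the following time step.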

To conceptualize how RNNs process sequences, imagine unrolling this loop through time. Each time step is represented as a separate node at each layer, and the recurrent connections turn into forward connections between these nodes. This representation is known as the unfolded or unrolled graph, and it helps elucidate how each piece of input data is processed through time to affect the final output.
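In code, unrolling corresponds to an ordinary loop over the sequence. The sketch below assumes a scalar RNN (one input value and one hidden unit) to keep it short; `run_rnn` and its parameter names are hypothetical, and the weights are arbitrary.

```python
import math

def run_rnn(xs, h0, w_x, w_h, b):
    """Unroll a scalar RNN over the sequence xs and return every hidden state."""
    h = h0
    states = []
    for x_t in xs:
        # One loop iteration corresponds to one node of the unrolled graph:
        # it consumes the current input and the state from the previous step.
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

states = run_rnn([1.0, 0.5, -1.0], h0=0.0, w_x=0.8, w_h=0.5, b=0.0)
```

Each entry of `states` depends on every earlier input through the chain of hidden states, which is the dependency structure the unrolled graph makes visible.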

A distinct feature of RNNs is the sharing of weights across time. Unlike in traditional feedforward neural networks, where each layer has its own set of weights, an RNN applies the same weights at every time step. This weight sharing keeps the number of parameters independent of the sequence length, which makes the network more manageable to train, and it also imposes temporal consistency, since the same weights process every part of the sequence.
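A quick count illustrates the effect of weight sharing. The sketch assumes a single plain RNN layer with an input weight matrix, a recurrent weight matrix, and a bias vector; the function names and layer sizes are made up for the example, and the "unshared" variant is a hypothetical comparison, not an architecture discussed here.

```python
def rnn_param_count(n_in, n_hidden):
    """Parameters of one plain RNN layer: input weights, recurrent weights, bias."""
    return n_hidden * n_in + n_hidden * n_hidden + n_hidden

def unshared_param_count(n_in, n_hidden, seq_len):
    """Hypothetical comparison: a separate copy of the weights for every time step."""
    return seq_len * rnn_param_count(n_in, n_hidden)

shared = rnn_param_count(n_in=10, n_hidden=20)                      # 200 + 400 + 20 = 620
unshared = unshared_param_count(n_in=10, n_hidden=20, seq_len=50)   # 620 * 50 = 31000
```

The shared count stays at 620 whether the sequence has 5 steps or 5,000, while the unshared count grows linearly with the sequence length.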

Training RNNs with Backpropagation Through Time

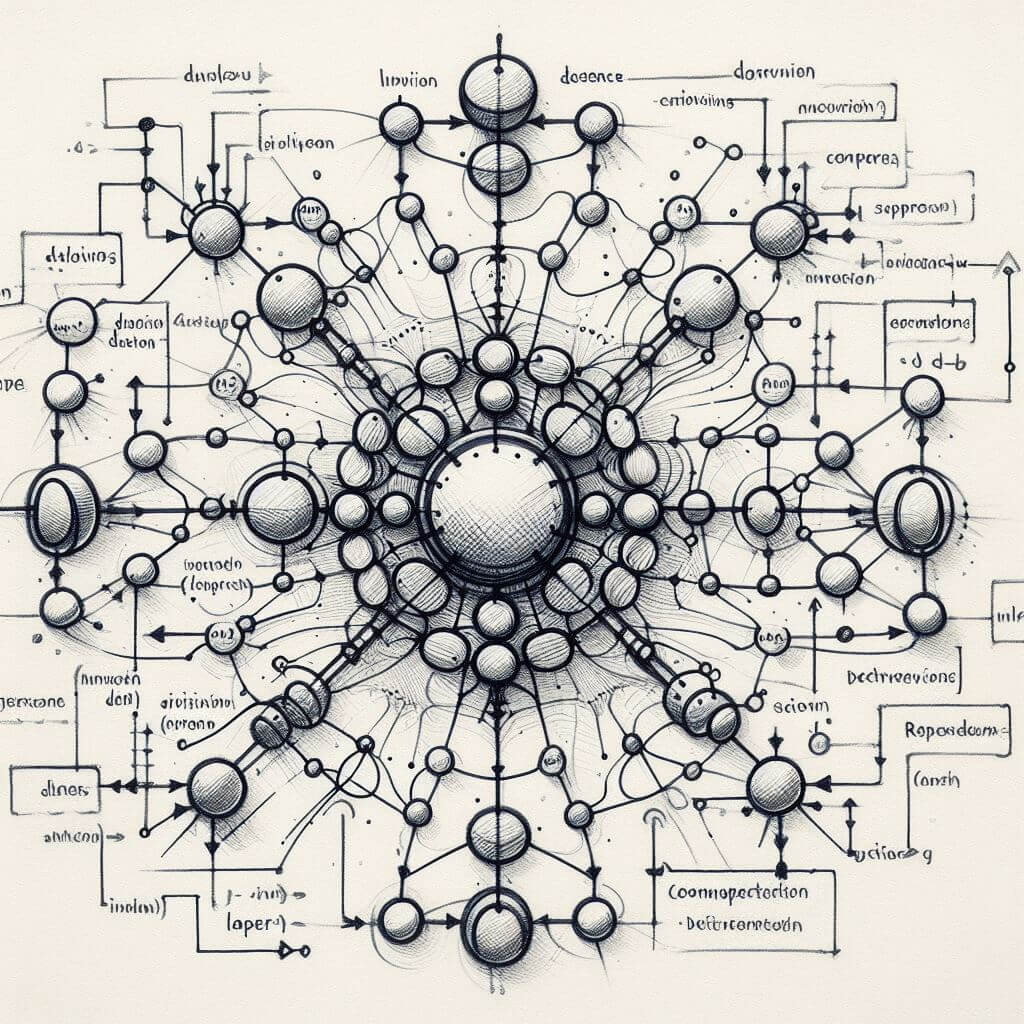

RNNs are adept at managing data where the context and sequence matter, yet training them efficiently is not without its challenges. Training a Recurrent Neural Network is more complicated than training a standard feedforward network due to the temporal sequence of the data and the recurrent nature of the connections. The fundamental technique used for training RNNs is called Backpropagation Through Time (BPTT), which is a variant of the well-known backpropagation algorithm used in feedforward neural networks, adapted to handle the sequential data that RNNs process.

The BPTT algorithm involves two primary phases: the forward pass and the backward pass. During the forward pass, the input sequence is fed into the network one time step after another, with each hidden state being influenced by the previous time step’s hidden state through the recurrent connections. The network’s output is then compared to the target, either once at the end of the input sequence or at every time step when the task provides a target per step, and an error is computed.

Once the forward pass is completed, BPTT commences the backward pass, where the error is propagated back through the network to calculate the gradients of the error with respect to the weights. These gradients are used to adjust the weights in a way that minimizes the error.

To apply backpropagation, the network must be unrolled in time, transforming it into a deep feedforward network where each layer represents a time step in the original recurrent structure. The unrolling creates a graphical structure where the temporal dependencies are laid out as connections across what resemble separate layers. The main advantage of this representation is that the standard backpropagation algorithm used for feedforward neural networks can now be applied directly.

During the backward pass, it’s important to calculate the gradients at each time step and keep track of how the output error is affected by the weights not just at the current step but at all preceding steps. This includes partial derivatives of the error with respect to the recurrent weights that influence the network across different time steps. The contribution of the error from all time steps must then be summed to update the recurrent weights.
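The forward and backward passes can be made concrete with a small worked example. The sketch below makes several simplifying assumptions that are not part of the text above: a scalar RNN with tanh activation, the final hidden state used directly as the output, and a squared-error loss. All function and variable names are hypothetical.

```python
import math

def forward(xs, w_x, w_h, b, h0=0.0):
    """Forward pass: returns the hidden states [h_0, h_1, ..., h_T]."""
    hs = [h0]
    for x_t in xs:
        hs.append(math.tanh(w_x * x_t + w_h * hs[-1] + b))
    return hs

def loss_fn(xs, target, w_x, w_h, b):
    """Squared error between the final hidden state and the target."""
    return 0.5 * (forward(xs, w_x, w_h, b)[-1] - target) ** 2

def bptt(xs, target, w_x, w_h, b):
    """Backward pass: gradients of the loss with respect to the shared weights."""
    hs = forward(xs, w_x, w_h, b)
    g_wx = g_wh = g_b = 0.0
    dh = hs[-1] - target                 # dL/dh_T
    for t in range(len(xs), 0, -1):      # walk back through the unrolled steps
        da = dh * (1.0 - hs[t] ** 2)     # through tanh: dL/d(pre-activation at step t)
        g_wx += da * xs[t - 1]           # every step adds its contribution
        g_wh += da * hs[t - 1]           # to the gradient of the shared weights
        g_b += da
        dh = da * w_h                    # pass the error on to h_{t-1}
    return g_wx, g_wh, g_b

xs, target = [1.0, 0.5, -1.0], 0.3
g_wx, g_wh, g_b = bptt(xs, target, 0.8, 0.5, 0.1)

# Finite-difference check on the recurrent weight as a sanity test.
eps = 1e-6
numeric_g_wh = (loss_fn(xs, target, 0.8, 0.5 + eps, 0.1)
                - loss_fn(xs, target, 0.8, 0.5 - eps, 0.1)) / (2 * eps)
```

The accumulation with `+=` inside the loop is the summation over time steps: because the same weights are used at every step, each step contributes one term to their gradient. Comparing `g_wh` with `numeric_g_wh` is a common way to check a hand-written backward pass.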

While BPTT makes it possible to train RNNs on temporal sequences, it’s not without its own set of challenges. The very essence of BPTT, which involves stretching out the RNN across time steps, becomes increasingly costly as the length of the input sequences grows. Longer sequences can also lead to vanishing and exploding gradients: because the error is multiplied by similar factors at every step on its way back through time, the gradients can shrink or grow exponentially with sequence length. This makes the network difficult to train, and it often fails to capture long-term dependencies in the sequence.
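The exponential behavior is easiest to see in a deliberately simplified case. The sketch below assumes a scalar linear recurrence h_t = w_h * h_{t-1} with no activation function, for which the derivative of the last state with respect to the first is exactly w_h raised to the number of steps. The function name is hypothetical. With a tanh activation, each factor would additionally be multiplied by a derivative of at most 1, which can only shrink it further.

```python
def linear_gradient_factor(w_h, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w_h * h_{t-1}."""
    factor = 1.0
    for _ in range(steps):
        factor *= w_h      # one multiplication per step walked back in time
    return factor

for w_h in (0.5, 1.5):
    for steps in (1, 10, 50):
        factor = linear_gradient_factor(w_h, steps)
        print(f"w_h={w_h}, steps={steps}: factor={factor:.3e}")
```

A recurrent weight below 1 in magnitude drives the factor toward zero (vanishing), while a weight above 1 makes it blow up (exploding); the gap widens with every additional time step.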

To address the issue of vanishing gradients in BPTT, researchers have introduced a variety of specialized architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). These architectures implement gating mechanisms that control how much of the past data should influence the current state and future outputs, allowing the network to retain or forget information. This mitigates the vanishing gradient problem by making it easier for the model to carry information across many time steps.
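The idea of a gate can be sketched with a single scalar unit. The code below is a simplified, GRU-like update with only one gate; it is not the full LSTM or GRU formulation, and the function name, the parameter dictionary, and its keys are assumptions made for this illustration.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gated_step(x_t, h_prev, p):
    """Simplified scalar gated update (GRU-like, update gate only).

    p is a dict of illustrative parameters; the key names are assumptions.
    """
    # Gate value in (0, 1): how much of the state to overwrite.
    z = sigmoid(p["w_z"] * x_t + p["u_z"] * h_prev + p["b_z"])
    # Candidate state, computed like a plain RNN step.
    h_cand = math.tanh(p["w_h"] * x_t + p["u_h"] * h_prev + p["b_h"])
    # Blend: keep (1 - z) of the old state, take z of the candidate.
    return (1.0 - z) * h_prev + z * h_cand

base = {"w_z": 0.0, "u_z": 0.0, "w_h": 1.0, "u_h": 0.5, "b_h": 0.0}
closed = dict(base, b_z=-20.0)   # gate almost shut: the old state is retained
opened = dict(base, b_z=20.0)    # gate almost open: the old state is replaced

kept = gated_step(1.0, 0.7, closed)
replaced = gated_step(1.0, 0.7, opened)
```

When the gate is nearly closed, the previous state passes through almost unchanged, so information (and the gradient flowing back along that path) is not repeatedly squashed by the activation function. In a trained network the gate parameters are learned rather than set by hand as they are here.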

Applications of RNNs

The dynamic capabilities of Recurrent Neural Networks (RNNs) to handle sequential data with context sensitivity have rendered them valuable across a wide spectrum of real-world applications. This adaptability is chiefly due to their architecture, which allows them to process information as it evolves over time, making them particularly suited for domains where data is not just a collection of individual points, but where each point is related to its predecessors and successors.

One of the most prominent application areas for RNNs is Natural Language Processing. RNNs are used in language modeling, where they predict the likelihood of a word based on the preceding words, an essential task for text generation and speech recognition. Language translation services use RNNs to translate sequences of text from one language into another, taking context into account to preserve meaning and grammatical correctness. RNNs are also used in sentiment analysis, which involves understanding the sentiment conveyed by a piece of text, enabling businesses to gauge public sentiment about products or services.

RNNs have revolutionized speech recognition systems by being able to process speech over time. They transcribe audio into text by recognizing patterns in sound waves and associating them with linguistic elements over time intervals. The memory feature of RNNs is particularly useful for distinguishing between similar-sounding phonemes and for understanding the context in which certain words are spoken, which is vital for accurate transcription.

In video processing, RNNs are adept at handling sequences of images (frames), allowing them to perform video classification, event detection, and subtitle generation. The network processes the frames of the video in sequence, thus capturing the action and motion context which is crucial when the analysis depends not just on what is present in individual frames but how those contents change over time.

RNNs are also employed for their predictive capabilities in time-series analysis. In financial markets, they can predict stock movements or risk trends by analyzing historical data and identifying patterns over time. Weather forecasting leverages RNNs to model and predict climatic conditions based on the sequence of collected meteorological data, where each data point is temporally dependent on the previous ones, making RNNs a fitting model for such applications.

The healthcare industry uses RNNs for analyzing sequential data such as patient vitals, ECG readings, or other time-series data from medical sensors. By recognizing patterns in the evolution of a patient’s condition, RNNs can assist in diagnosing diseases and predicting medical events like seizures or heart attacks before they occur, enabling preventative measures.

In creative domains, RNNs have been used for generating music and art. In music, with the temporal nature of the medium, RNNs can predict the next note or chord sequence by learning from existing compositions, thus creating new pieces of music. Similarly, in art, RNNs can analyze the styles of various artists and generate new artworks by learning and mimicking their style over a sequence of brush strokes.

RNNs enhance personalization in e-commerce and web services through recommendation systems. By analyzing a user’s sequence of interactions, such as the items they have viewed or purchased over time, RNNs can predict and recommend new products or content the user might be interested in.

In robotics and control systems, RNNs are utilized for tasks that require a sequence of actions or controls. Since RNNs can predict the temporal evolution of scenarios, they enhance autonomous systems like drones or self-driving cars, allowing them to make real-time decisions based on continuous sensory input over time.