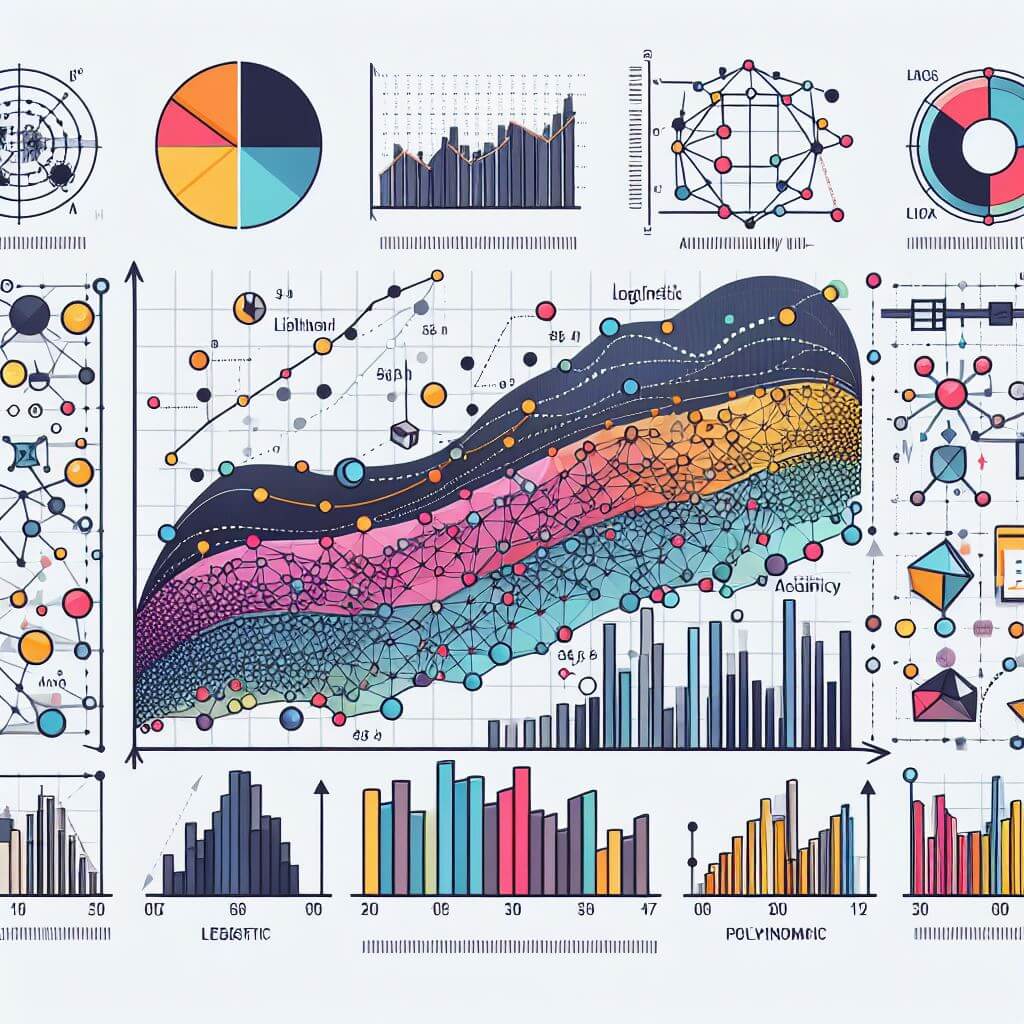

In data analysis and forecasting, two methodologies stand out for their contrasting approaches to modeling complex datasets: traditional regression analysis and machine learning regression methods. Each offers its own set of tools and perspectives for tackling a wide range of predictive modeling problems.

Understanding Traditional Regression Analysis

Traditional regression analysis is a cornerstone of statistics, used above all to investigate and quantify relationships between variables. It rests on a simple but powerful premise: if you understand how variables relate to one another, you can make informed predictions about outcomes. At its heart are the dependent variable, the outcome or response that researchers seek to predict or explain, and the independent variables, the predictors believed to influence it.

Traditional regression relies mainly on linear and logistic regression models to describe these relationships. Linear regression is used when the dependent variable is continuous, so its predictions can take any value on a continuous scale. It is valued for its simplicity and interpretability. It assumes that the dependent variable is a linear function of the independent variables plus random error, and it estimates the linear model that best fits the data. Each fitted coefficient then describes how much the dependent variable is expected to change for a one-unit change in the corresponding independent variable, holding all other predictors constant, which is the essence of traditional regression’s appeal.
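
As a minimal sketch of how a linear regression is estimated and read, the Python below fits a two-predictor model by ordinary least squares, solving the normal equations (XᵀX)β = Xᵀy with a small hand-written Gaussian elimination so that only the standard library is needed. The data are synthetic, generated from an assumed model y = 2 + 3·x1 - 1.5·x2 + noise, and the helpers `solve` and `ols` are hypothetical names for illustration. Production libraries usually solve least squares with QR or SVD decompositions, which are numerically more stable than forming XᵀX.

```python
import random

# Illustrative sketch: helper names and synthetic data are assumptions.


def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination
    with partial pivoting (pure Python, for illustration only)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in reversed(range(n)):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def ols(X, y):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y.
    A column of ones is prepended so beta[0] is the intercept."""
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


# Synthetic data from an assumed model: y = 2 + 3*x1 - 1.5*x2 + noise.
random.seed(42)
X = [(random.uniform(0, 10), random.uniform(0, 10)) for _ in range(500)]
y = [2 + 3 * x1 - 1.5 * x2 + random.gauss(0, 0.5) for x1, x2 in X]

intercept, b1, b2 = ols(X, y)
# b1 estimates the expected change in y per one-unit increase in x1
# with x2 held fixed; b2 plays the same role for x2.
print(f"intercept={intercept:.2f}, b1={b1:.2f}, b2={b2:.2f}")
```

Because the data come from a known model, `b1` should land close to 3: each one-unit increase in `x1`, with `x2` held fixed, raises the expected value of `y` by about 3 units.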

Logistic regression, by contrast, is used for categorical outcomes, most commonly binary ones. It models the probability that an observation belongs to a given category, which provides a framework for classification tasks within a regression setting. This distinction shows how traditional regression can handle different types of outcomes while keeping the same underlying principles.
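
To illustrate what modeling a probability means in practice, here is a sketch of one-predictor logistic regression fitted by plain gradient ascent on the log-likelihood. This optimizer is chosen only for transparency; statistical packages typically use Newton-type methods such as iteratively reweighted least squares. The labels are simulated from an assumed model with intercept 0.5 and slope 2, and `fit_logistic`, the learning rate, and the number of epochs are illustrative choices, not a reference implementation.

```python
import math
import random

# Illustrative sketch: fit_logistic, lr, epochs and the data are assumptions.


def sigmoid(z):
    """Numerically stable logistic function: maps any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.5, epochs=1000):
    """Fit P(y = 1 | x) = sigmoid(b0 + b1 * x) by batch gradient ascent on the
    mean log-likelihood (one predictor, no regularisation)."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean log-likelihood is the average of (y - p) * (1, x).
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)
            g0 += err
            g1 += err * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1


# Synthetic binary outcomes from an assumed model with b0 = 0.5 and b1 = 2.
random.seed(1)
xs = [random.uniform(-3, 3) for _ in range(400)]
ys = [1 if random.random() < sigmoid(0.5 + 2 * x) else 0 for x in xs]

b0, b1 = fit_logistic(xs, ys)
p_at_1 = sigmoid(b0 + b1 * 1.0)  # estimated probability that y = 1 when x = 1
accuracy = sum((sigmoid(b0 + b1 * x) >= 0.5) == (y == 1)
               for x, y in zip(xs, ys)) / len(xs)
print(f"b0={b0:.2f}, b1={b1:.2f}, P(y=1 | x=1)={p_at_1:.2f}, accuracy={accuracy:.2f}")
```

The fitted model outputs a probability for every input; thresholding that probability at 0.5 is what turns the regression into a classifier.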

A critical aspect of traditional regression analysis is its reliance on assumptions about the data, including linearity, independence of observations, and residuals that are normally distributed with constant variance (homoscedasticity). The reliability of the conclusions drawn from a regression model depends on how well these assumptions hold. Researchers should test them carefully and consider transforming the variables or choosing an alternative model when they are violated.
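
As an informal illustration of assumption checking, the sketch below fits a straight line and compares the residual variance in the upper and lower halves of the predictor, loosely in the spirit of the Goldfeld-Quandt test. A ratio near 1 is consistent with homoscedasticity; a large ratio suggests the error variance grows with x. The data, the seed, and the `variance_ratio` helper are assumptions for illustration; in practice analysts combine residual plots with formal tests such as Breusch-Pagan or White for heteroscedasticity and Shapiro-Wilk for residual normality.

```python
import random

# Illustrative sketch: variance_ratio is a hypothetical, informal diagnostic.


def fit_line(xs, ys):
    """Closed-form simple linear regression; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


def variance_ratio(xs, ys):
    """Fit a line, split the residuals at the median of x, and return
    var(upper half) / var(lower half). Values far from 1 suggest that
    the residual variance changes with x."""
    a, b = fit_line(xs, ys)
    pairs = sorted(zip(xs, ys))  # order observations by x
    resid = [y - (a + b * x) for x, y in pairs]
    half = len(resid) // 2
    lower, upper = resid[:half], resid[half:]

    def var(v):
        m = sum(v) / len(v)
        return sum((r - m) ** 2 for r in v) / (len(v) - 1)

    return var(upper) / var(lower)


random.seed(7)
xs = [random.uniform(1, 10) for _ in range(1000)]
# Constant noise (homoscedastic) vs noise whose spread grows with x.
y_const = [1 + 2 * x + random.gauss(0, 1) for x in xs]
y_grow = [1 + 2 * x + random.gauss(0, 0.5 * x) for x in xs]

print(round(variance_ratio(xs, y_const), 2))  # expected to be close to 1
print(round(variance_ratio(xs, y_grow), 2))   # expected to be well above 1
```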

Despite its elegance and power, traditional regression has limitations. One of the main ones is that it struggles with nonlinear relationships unless they are explicitly modeled or handled through variable transformations. In addition, traditional regression models can become unwieldy when there are many predictor variables, which can lead to overfitting, where the model captures noise rather than the underlying relationship.
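
A small sketch makes the first limitation concrete. The simulated relationship below is assumed to be U-shaped (y = x² plus noise). A straight line in x explains almost none of the variation, while the same least-squares fit applied to the transformed predictor x² fits well, because the nonlinearity has been modeled explicitly. The `r_squared_of_line` helper and the data are hypothetical.

```python
import random

# Illustrative sketch: r_squared_of_line and the data are assumptions.


def r_squared_of_line(xs, ys):
    """Fit y = a + b*x by least squares and return the R^2 of the fit."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


random.seed(3)
xs = [random.uniform(-3, 3) for _ in range(500)]
ys = [x ** 2 + random.gauss(0, 0.5) for x in xs]  # a U-shaped relationship

r2_raw = r_squared_of_line(xs, ys)                            # line in x
r2_transformed = r_squared_of_line([x ** 2 for x in xs], ys)  # line in x^2
print(f"R^2 using x: {r2_raw:.3f}, R^2 using x^2: {r2_transformed:.3f}")
```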

The interpretability of traditional regression models is a significant advantage, offering a clear picture of how variables are related. This makes traditional regression especially valuable in fields where understanding the direction and strength of relationships matters as much as predictive accuracy. In economics, health care, and the social sciences, for example, traditional regression provides well-established tools for hypothesis testing, allowing researchers to draw meaningful inferences about the factors that influence various outcomes.
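
The inferential side can be sketched as well. The code fits a simple linear regression and tests the null hypothesis that the slope is zero using the t-statistic, the slope divided by its standard error. To stay within the standard library, the p-value uses a normal approximation to the t distribution, which is only reasonable for large samples; a statistics package would use the exact t distribution with n - 2 degrees of freedom. Both datasets are simulated, and `slope_t_test` is a hypothetical helper.

```python
import math
import random

# Illustrative sketch: slope_t_test and the simulated data are assumptions.


def slope_t_test(xs, ys):
    """Simple linear regression with a test of H0: slope = 0.
    Returns (slope, standard error, t-statistic, two-sided p-value).
    The p-value uses a normal approximation, adequate only for large n."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    sse = sum((y - intercept - slope * x) ** 2 for x, y in zip(xs, ys))
    se = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    t = slope / se
    p = math.erfc(abs(t) / math.sqrt(2))  # P(|Z| > |t|) for standard normal Z
    return slope, se, t, p


random.seed(11)
xs = [random.uniform(0, 10) for _ in range(200)]
y_related = [5 + 0.8 * x + random.gauss(0, 2) for x in xs]
y_unrelated = [5 + random.gauss(0, 2) for _ in xs]

for label, ys in [("related", y_related), ("unrelated", y_unrelated)]:
    slope, se, t, p = slope_t_test(xs, ys)
    print(f"{label}: slope={slope:.3f} (SE {se:.3f}), t={t:.1f}, p={p:.3g}")
```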

The Emergence of Machine Learning Regression Methods

Machine learning is about enabling machines to learn from data, identify patterns, and make decisions with minimal human intervention. Within this broad field, regression techniques focus specifically on predicting continuous outcomes, using algorithms whose performance can improve as they are exposed to more data. The range of algorithms is large and varied, including but not limited to decision trees, which repeatedly split the data into branches according to predictor values and predict the average outcome within each final branch; random forests, an ensemble method that combines the predictions of many decision trees to improve accuracy; gradient boosting machines, which add models one at a time, each fitted to reduce the errors of the ensemble built so far; and neural networks, which, loosely inspired by the structure of the human brain, can capture complex nonlinear relationships through layers of interconnected nodes.
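
Gradient boosting is the easiest of these algorithms to sketch from scratch. The version below assumes squared-error loss, a single numeric feature, and depth-one trees (stumps) as base learners: it starts from the mean, then repeatedly fits a stump to the current residuals and adds a shrunken copy of it to the ensemble. Real libraries use deeper trees, many features, and further refinements; the function names, the 200 rounds, and the learning rate of 0.1 are illustrative assumptions.

```python
import math
import random

# Illustrative sketch: names, rounds and learning rate are assumptions.


def fit_stump(xs, residuals):
    """Least-squares regression stump on one feature. Returns
    (threshold, left_value, right_value): points with x <= threshold get
    left_value, the rest get right_value (each side's mean residual)."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    n = len(order)
    total = sum(residuals)
    best = None
    left_sum = 0.0
    for k in range(1, n):
        left_sum += residuals[order[k - 1]]
        lo, hi = xs[order[k - 1]], xs[order[k]]
        if lo == hi:
            continue  # no threshold separates identical x values
        left_mean = left_sum / k
        right_mean = (total - left_sum) / (n - k)
        # Minimising the split's squared error is equivalent to maximising this.
        score = k * left_mean ** 2 + (n - k) * right_mean ** 2
        if best is None or score > best[0]:
            best = (score, (lo + hi) / 2, left_mean, right_mean)
    _, threshold, left_value, right_value = best
    return threshold, left_value, right_value


def gradient_boost(xs, ys, rounds=200, learning_rate=0.1):
    """Gradient boosting for squared-error loss with stumps as base learners.
    Each round fits a stump to the residuals (the negative gradient of the
    loss) and adds a shrunken copy of it to the ensemble."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps, history = [], []
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lv, rv = fit_stump(xs, residuals)
        stumps.append((t, learning_rate * lv, learning_rate * rv))
        preds = [p + (stumps[-1][1] if x <= t else stumps[-1][2])
                 for x, p in zip(xs, preds)]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return base, stumps, history


def predict(model, x):
    base, stumps, _ = model
    return base + sum(lv if x <= t else rv for t, lv, rv in stumps)


random.seed(5)
xs = [random.uniform(0, 6) for _ in range(200)]
ys = [math.sin(x) + random.gauss(0, 0.1) for x in xs]

model = gradient_boost(xs, ys)
history = model[2]
print(f"training MSE after 1 round: {history[0]:.3f}, after 200: {history[-1]:.3f}")
```

With squared-error loss and a shrinkage factor between 0 and 2, adding a least-squares stump can never increase the training error, so the recorded history only goes down; held-out data, not training error, is what indicates when to stop adding rounds.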

What distinguishes machine learning regression is its ability to learn useful representations directly from the raw data. Instead of requiring a human analyst to manually select and transform the features fed into a predictive model, a process that risks overlooking complex interactions between variables, many machine learning algorithms can identify and combine features automatically in the way that best improves predictive accuracy. This capacity for automatic feature learning is especially valuable with large, high-dimensional datasets, where manual feature engineering becomes impractical and traditional models struggle.
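
A regression tree gives a compact illustration of a model discovering an interaction on its own. In the sketch below, the simulated target is 1 only when both features are positive (plus small noise), a surface that no model additive in x1 and x2, including a linear regression on the raw features, can represent exactly. The greedy tree is never told about the interaction, yet it ends up splitting on both features. The tree builder, its depth limit of 2, and the minimum leaf size are hypothetical choices for illustration.

```python
import random

# Illustrative sketch: the tree builder and its settings are assumptions.


def best_split(X, y, idx, min_leaf):
    """Search every feature and threshold for the split of rows `idx` that
    minimises the total squared error around the two child means.
    Returns (feature, threshold, sse), or None if no valid split exists."""
    best = None
    n = len(idx)
    for f in range(len(X[0])):
        order = sorted(idx, key=lambda i: X[i][f])
        total = sum(y[i] for i in order)
        total_sq = sum(y[i] ** 2 for i in order)
        left_sum = left_sq = 0.0
        for k in range(1, n):
            yi = y[order[k - 1]]
            left_sum += yi
            left_sq += yi * yi
            if k < min_leaf or n - k < min_leaf:
                continue  # respect the minimum leaf size
            lo, hi = X[order[k - 1]][f], X[order[k]][f]
            if lo == hi:
                continue  # identical values cannot be separated
            right_sum, right_sq = total - left_sum, total_sq - left_sq
            sse = (left_sq - left_sum ** 2 / k) + (right_sq - right_sum ** 2 / (n - k))
            if best is None or sse < best[2]:
                best = (f, (lo + hi) / 2, sse)
    return best


def build(X, y, idx, depth=0, max_depth=2, min_leaf=5):
    """Grow a regression tree greedily; leaves predict the mean target."""
    split = best_split(X, y, idx, min_leaf) if depth < max_depth else None
    if split is None:
        return {"value": sum(y[i] for i in idx) / len(idx)}
    f, t, _ = split
    left = [i for i in idx if X[i][f] <= t]
    right = [i for i in idx if X[i][f] > t]
    return {"feature": f, "threshold": t,
            "left": build(X, y, left, depth + 1, max_depth, min_leaf),
            "right": build(X, y, right, depth + 1, max_depth, min_leaf)}


def predict(node, x):
    while "value" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["value"]


def features_used(node):
    if "value" in node:
        return set()
    return {node["feature"]} | features_used(node["left"]) | features_used(node["right"])


random.seed(0)
X = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(400)]
# Target is 1 only when BOTH features are positive: a pure interaction effect.
y = [float(a > 0 and b > 0) + random.gauss(0, 0.05) for a, b in X]

tree = build(X, y, list(range(len(y))))
mean_y = sum(y) / len(y)
ss_tot = sum((v - mean_y) ** 2 for v in y)
ss_res = sum((v - predict(tree, x)) ** 2 for x, v in zip(X, y))
r2 = 1 - ss_res / ss_tot
print(f"R^2 = {r2:.3f}, features used: {sorted(features_used(tree))}")
```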

Machine learning models thrive on data. Flexible models of this kind generally become more accurate as the amount of training data grows, which makes them well suited to today’s data-rich environments, where they can be retrained and adapted as new data becomes available. Traditional models behave differently: more data makes their coefficient estimates more precise, but it cannot correct a misspecified functional form. A linear model fitted to a curved relationship remains biased however much data it receives, and adapting it to new patterns may require re-specifying the model.
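
The contrast can be sketched with a simulated learning curve. The true relationship is assumed to be sin(x) plus noise; a straight line and a k-nearest-neighbors regressor (a simple flexible learner chosen for brevity) are each trained on 30 and then 3,000 points and scored against the noise-free curve. The nearest-neighbors error falls as data grows, while the line’s error stalls at the bias caused by its misspecified shape. The sample sizes, the square-root rule for choosing k, and all helper names are illustrative assumptions.

```python
import heapq
import math
import random

# Illustrative sketch: helper names, sample sizes and the k rule are assumptions.


def fit_line(xs, ys):
    """Least-squares straight line; returns a prediction function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def fit_knn(xs, ys, k):
    """1-D k-nearest-neighbours regression: predict the mean target of the
    k training points closest to the query."""
    train = list(zip(xs, ys))

    def predict(x):
        nearest = heapq.nsmallest(k, train, key=lambda p: abs(p[0] - x))
        return sum(y for _, y in nearest) / k

    return predict


def sample(n, rng):
    xs = [rng.uniform(0, 6) for _ in range(n)]
    return xs, [math.sin(x) + rng.gauss(0, 0.3) for x in xs]


def error_vs_truth(model, test_xs):
    """Mean squared error against the noise-free function sin(x)."""
    return sum((model(x) - math.sin(x)) ** 2 for x in test_xs) / len(test_xs)


rng = random.Random(0)
test_xs = [rng.uniform(0, 6) for _ in range(300)]
results = {}
for n in (30, 3000):
    xs, ys = sample(n, rng)
    k = max(1, int(math.sqrt(n)))  # let the neighbourhood grow slowly with n
    results[n] = (error_vs_truth(fit_line(xs, ys), test_xs),
                  error_vs_truth(fit_knn(xs, ys, k), test_xs))
    print(n, "line:", round(results[n][0], 3), "knn:", round(results[n][1], 3))
```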

Advanced Methodology and Application

Examining traditional regression and machine learning regression more closely shows how each has been developed to suit different analytical questions and data landscapes. Traditional regression analysis follows a structured methodology grounded in statistical theory. It often begins with a hypothesis about specific relationships between variables. Researchers then choose a model suited to that hypothesis, typically linear or logistic regression, and estimate its parameters, for example with ordinary least squares (OLS) for linear regression. Traditional methodology places considerable emphasis on checking assumptions such as linearity, independence, and homoscedasticity, because the interpretability and reliability of the model’s conclusions depend on them.

At the other end of the spectrum, machine learning regression takes a more flexible, data-driven approach. The methodology is iterative and adaptive: models are selected on predictive performance rather than on strict assumptions about the data distribution. The process often involves extensive preprocessing, such as handling missing values, normalizing numeric features, and encoding categorical variables in a form the algorithms can use. At the core is training, in which a model learns to map inputs to outputs by adjusting its parameters to minimize prediction error. Cross-validation, which repeatedly holds out part of the data to estimate performance on unseen examples, guides model selection and hyperparameter tuning and helps guard against overfitting, reflecting a clear focus on predictive accuracy over interpretation.
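
Cross-validation can be sketched in a few lines. Below, 5-fold cross-validation chooses the number of neighbors for a one-dimensional k-nearest-neighbors regressor: each fold is held out once, the model is fitted on the remaining folds, and the candidate with the lowest average held-out error wins. The simulated data, the candidate values, and the helper names are assumptions; libraries such as scikit-learn provide equivalent utilities (for example `GridSearchCV`).

```python
import heapq
import math
import random

# Illustrative sketch: knn_predict, cv_mse and the data are assumptions.


def knn_predict(train, x, n_neighbors):
    """Mean target of the n_neighbors training pairs (x, y) nearest to x."""
    nearest = heapq.nsmallest(n_neighbors, train, key=lambda p: abs(p[0] - x))
    return sum(y for _, y in nearest) / n_neighbors


def cv_mse(data, n_neighbors, folds=5):
    """Cross-validated mean squared error: row i belongs to fold i % folds,
    and each fold is scored by a model fitted on all the other folds."""
    errors = []
    for f in range(folds):
        held_out = data[f::folds]
        train = [p for i, p in enumerate(data) if i % folds != f]
        errors.extend((y - knn_predict(train, x, n_neighbors)) ** 2
                      for x, y in held_out)
    return sum(errors) / len(errors)


rng = random.Random(2)
xs = [rng.uniform(0, 6) for _ in range(200)]
data = [(x, math.sin(x) + rng.gauss(0, 0.3)) for x in xs]  # already in random order

scores = {k: cv_mse(data, k) for k in (1, 5, 15, 50)}
best_k = min(scores, key=scores.get)
print({k: round(v, 3) for k, v in scores.items()}, "best:", best_k)
```

A single nearest neighbor memorizes the noise in the training folds, which is exactly the overfitting that held-out error exposes.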

The applicability of these methodologies varies considerably across fields. Traditional regression is at home where the goal is not only to predict but to understand relationships between variables and, with an appropriate study design, cause-and-effect relationships. Fields such as economics, psychology, and epidemiology, where quantifying the effect of independent variables on the dependent variable is critical, often rely on traditional regression analysis. Machine learning regression, with its ability to process complex multivariate data and identify patterns that are not readily apparent to analysts, is widely used in fields such as finance, where forecasting stock prices requires analyzing vast amounts of market data, and weather forecasting, where models must account for numerous variables to predict outcomes accurately.