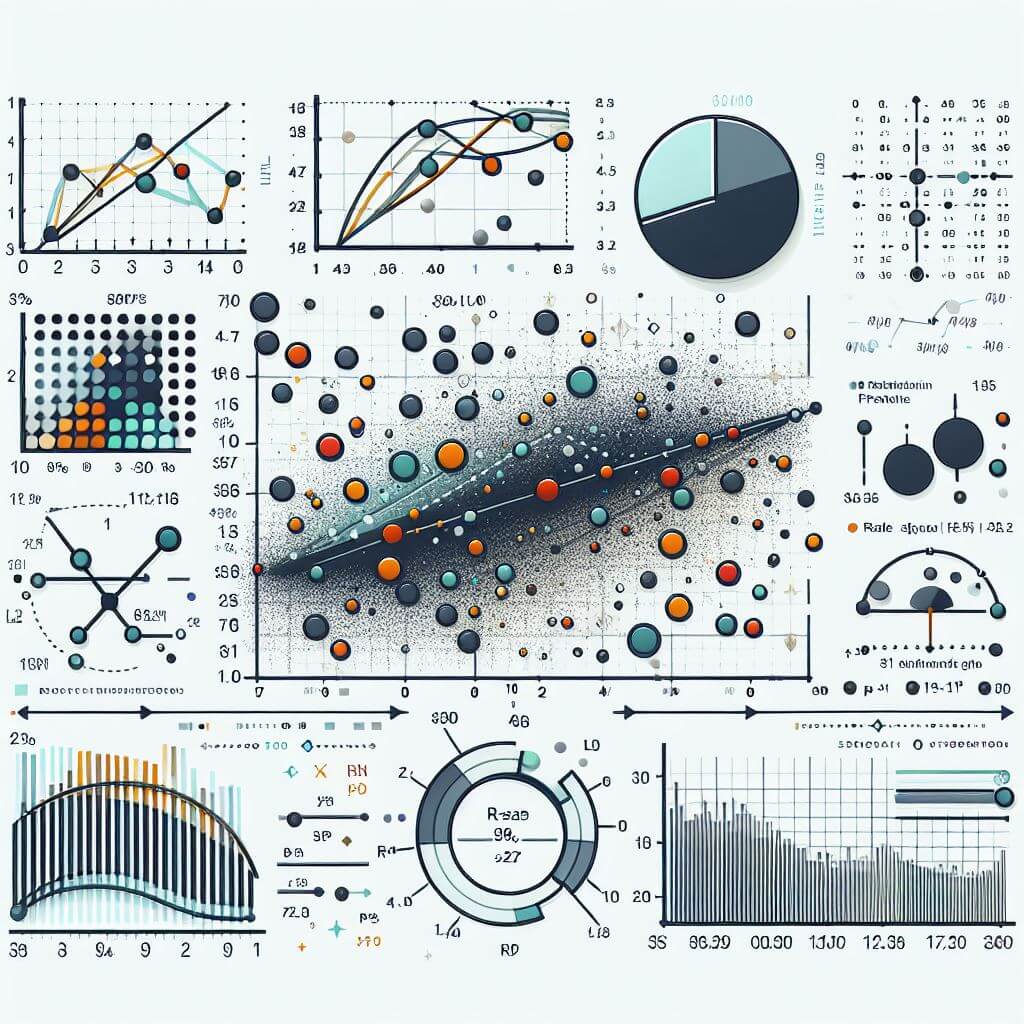

Regression analysis has been a staple of statistics for decades, giving analysts and researchers a way to understand relationships between variables and to predict outcomes. At its heart, regression models the relationship between one or more independent variables (predictors) and a dependent variable (outcome). The fitted relationship can then be used to predict values of the dependent variable for new observations of the independent variable(s). With the onset of the digital age, regression techniques have evolved considerably, branching into traditional statistical methods and machine learning-based methods, each with its own advantages and contexts of use.

Traditional Regression Analysis Simplified

Traditional regression techniques form the backbone of statistical analysis and trace back to the early history of the discipline. Fundamental to these methods is establishing a relationship between one or more independent variables (predictors) and a dependent variable, the outcome or response we want to predict or explain. At the core of these techniques is the classic linear regression model, which posits a linear relationship between the dependent and independent variables. With a single predictor, this relationship is the equation of a straight line, defined by an intercept and a slope; the slope represents the expected change in the outcome for a one-unit change in the predictor.
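
For one predictor, the model described above can be written as follows. The symbols β₀ (intercept), β₁ (slope), and ε (error term) are standard textbook notation introduced here for illustration, not taken from the article.

```latex
y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \quad i = 1, \dots, n,
\qquad
\mathbb{E}[\, y \mid x \,] = \beta_0 + \beta_1 x
```

Increasing x by one unit changes the expected value of y by β₁. The relationship is directly proportional only in the special case β₀ = 0.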

These traditional techniques emphasize simplicity and mathematical tractability; the primary goal is a model that explains the data in a straightforward way. To fit such a model, least squares estimation is typically used: it finds the best-fitting line by minimizing the sum of the squared vertical distances between the data points and the line (the residuals). The residuals also give a clear, quantifiable measure of how well the model's predictions match the observed data.
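
As a rough illustration of least squares for a single predictor, the sketch below uses the textbook closed-form solution (slope = covariance of x and y divided by variance of x). The function names and the toy data are hypothetical, chosen only for this example; a real analysis would normally use a statistics library.

```python
# Minimal sketch: ordinary least squares for one predictor, pure Python.
# Function names and data are illustrative assumptions, not a library API.


def fit_simple_ols(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x has zero variance; the slope is undefined")
    slope = sxy / sxx
    # The least-squares line always passes through the point of means.
    intercept = y_mean - slope * x_mean
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared vertical distances between the points and the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


if __name__ == "__main__":
    # Hypothetical noisy data scattered around a line.
    xs = [0, 1, 2, 3, 4]
    ys = [1.1, 2.9, 5.2, 6.8, 9.1]
    b0, b1 = fit_simple_ols(xs, ys)
    print(f"intercept={b0:.3f}, slope={b1:.3f}")
    print(f"SSE={sum_squared_residuals(xs, ys, b0, b1):.4f}")
```

No other line through these points has a smaller sum of squared residuals, which is exactly the criterion the paragraph describes.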

Beyond simplicity, a major advantage of traditional regression methods is their interpretability. In a multiple linear regression, which uses two or more predictors, the model assigns a coefficient to each independent variable. Each coefficient is interpreted as the expected change in the dependent variable for a one-unit change in that predictor, holding the other predictors in the model constant. This interpretability extends to hypothesis testing: researchers can assess the statistical significance of each coefficient to see which variables have a meaningful association with the dependent variable.
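
To make the "one coefficient per predictor" idea concrete, here is a minimal sketch that fits a multiple linear regression by solving the normal equations (XᵀX)β = Xᵀy with Gaussian elimination. The data are hypothetical and generated exactly from y = 1 + 2·x1 − 3·x2, so the fit should recover those coefficients. This is for illustration only; numerical libraries typically use more stable QR- or SVD-based solvers.

```python
# Minimal sketch: multiple linear regression via the normal equations.
# Helper names and data are illustrative assumptions.


def solve_linear_system(a, b):
    """Solve a @ x = b for square a using Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix so row operations apply to a and b together.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Pick the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system (e.g. perfectly collinear predictors)")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution from the last row upwards.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ols(rows, ys):
    """Return [intercept, coef_1, ..., coef_p] for predictor rows."""
    # Prepend a column of ones so the first coefficient is the intercept.
    X = [[1.0] + list(r) for r in rows]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(p)]
    return solve_linear_system(xtx, xty)


if __name__ == "__main__":
    rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 3)]
    ys = [1 + 2 * x1 - 3 * x2 for x1, x2 in rows]
    b0, b1, b2 = fit_ols(rows, ys)
    # b1: expected change in y per unit of x1, with x2 held fixed (and vice versa).
    print(f"intercept={b0:.3f}, x1={b1:.3f}, x2={b2:.3f}")
```

Each fitted coefficient can be read directly as a marginal effect, which is the interpretability advantage described above.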

Traditional regression is not without its limitations. These methods hinge on certain assumptions about the data, such as linearity, independence of errors, homoscedasticity (constant variance of residuals), normally distributed error terms, absence of multicollinearity (high correlation between independent variables), and absence of influential outliers. When these assumptions fail to hold, which is common with real-world data, the models can produce biased, inconsistent, or inefficient estimates, as well as unreliable standard errors and significance tests.
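
One of the assumptions listed above, absence of multicollinearity, can be checked with the variance inflation factor (VIF). The sketch below covers only the two-predictor case, where regressing one predictor on the other gives R² equal to the squared Pearson correlation r², so VIF = 1 / (1 − r²) for both. The data are hypothetical. A commonly cited rule of thumb treats VIF values above about 5 or 10 as a warning sign, though thresholds vary by field.

```python
# Minimal sketch: variance inflation factor for exactly two predictors.
# Helper names and data are illustrative assumptions.
import math


def pearson_r(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def vif_two_predictors(x1, x2):
    """VIF = 1 / (1 - r^2); infinite when the predictors are perfectly collinear."""
    r = pearson_r(x1, x2)
    if 1.0 - r * r < 1e-12:
        return math.inf
    return 1.0 / (1.0 - r * r)


if __name__ == "__main__":
    x1 = [1, 2, 3, 4]
    print("uncorrelated:", vif_two_predictors(x1, [1, -1, -1, 1]))
    print("nearly collinear:", round(vif_two_predictors(x1, [2, 4, 5, 8]), 1))
    print("perfectly collinear:", vif_two_predictors(x1, [2, 4, 6, 8]))
```

A large VIF means the coefficient's variance is inflated, so its standard error and significance test become unreliable even if the overall fit looks good.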

Despite these limitations, traditional regression models offer a robust starting point and are well-suited to tasks that call for a straightforward modeling approach. They are particularly valuable when the research question is about understanding causal relationships or when the data conform well to the assumptions underlying these methods. In the social sciences and economics, for instance, linear regression is used to understand and test theories about how changes in one factor may lead to changes in another.

The Machine Learning Regression Paradigm

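Among the most widely used machine learning (ML) regression techniques are decision trees, which recursively split the data into subsets using threshold rules on the predictor values, choosing each split to reduce prediction error. Each internal node of the tree is one such decision, and each leaf holds a prediction, typically the average outcome of the training points that reach it; this lets trees capture non-linear relationships without specifying them in advance. Building on decision trees, ensemble methods such as random forests average the predictions of many trees, each trained on a bootstrap sample of the data with a random subset of features considered at each split. This improves predictive accuracy and robustness and reduces the overfitting to which individual trees are prone. Another ensemble method, gradient boosting, builds trees sequentially, fitting each new tree to the errors (more precisely, the negative gradient of the loss) left by the ensemble so far, which often yields higher predictive performance.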
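
The two core ideas above can be sketched in pure Python under simplifying assumptions: a single numeric feature, depth-1 trees ("stumps"), squared-error loss, and a fixed learning rate. The stump picks the threshold that minimizes the sum of squared errors, and boosting repeatedly fits a stump to the current residuals, which for squared-error loss are the negative gradient. Random forests are not shown. All names and data here are hypothetical; production libraries add deeper trees, subsampling, and regularization.

```python
# Minimal sketch: regression stumps and gradient boosting (squared-error loss).


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree; return (threshold, left_value, right_value)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold between adjacent distinct values
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        # Each leaf predicts the mean of its points; score the split by total SSE.
        sse = sum((y - left_mean) ** 2 for y in left) + sum(
            (y - right_mean) ** 2 for y in right
        )
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    if best is None:
        # All x values are identical, so no split is possible: predict the mean.
        mean = sum(ys) / len(ys)
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_boosting(xs, ys, n_rounds=100, learning_rate=0.1):
    """Each new stump is fitted to the residuals of the ensemble built so far."""
    base = sum(ys) / len(ys)  # start from the mean outcome
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared-error loss, the negative gradient equals the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each stump's contribution by the learning rate.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict_boosting(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


if __name__ == "__main__":
    # Hypothetical non-linear data: y = x^2 on a grid.
    xs = [x / 2 for x in range(-6, 7)]
    ys = [x * x for x in xs]
    model = fit_boosting(xs, ys)
    baseline = mse(ys, [sum(ys) / len(ys)] * len(ys))
    boosted = mse(ys, [predict_boosting(model, x) for x in xs])
    print(f"training MSE: mean-only={baseline:.3f}, boosted={boosted:.3f}")
```

The small learning rate makes each tree correct only part of the remaining error, a common design choice that trades more rounds for less overfitting.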

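Support vector regression (SVR) takes yet another approach. Rather than finding a hyperplane that separates classes, as support vector machines do for classification, SVR fits a function that keeps as many data points as possible within a distance ε of it while keeping the function as flat as possible; only points outside this ε-tube contribute to the loss. Kernels let the same approach capture non-linear relationships by implicitly mapping the data into a higher-dimensional space. Neural networks, loosely inspired by biological neurons, consist of layers of interconnected nodes, each of which computes a weighted sum of its inputs and applies a non-linear activation function. Stacking such layers lets networks model highly intricate patterns, which makes them particularly powerful for tasks like image and speech recognition, where the relationships between inputs and outputs are complex and highly non-linear.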
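
The ε-tube idea can be made concrete with the ε-insensitive loss. The sketch below evaluates, but does not optimize, the standard linear SVR objective: half the squared norm of the weights (the "flatness" term) plus C times the sum of ε-insensitive losses. The function names and data are hypothetical; libraries such as scikit-learn's `SVR` expose the corresponding `C` and `epsilon` parameters and handle the actual optimization and kernels.

```python
# Minimal sketch: epsilon-insensitive loss and the linear SVR objective.
# Names and data are illustrative assumptions; no solver is included.


def epsilon_insensitive_loss(residual, epsilon):
    """Zero inside the epsilon-tube, growing linearly outside it."""
    return max(0.0, abs(residual) - epsilon)


def linear_svr_objective(w, b, xs, ys, epsilon=0.5, c=1.0):
    """0.5 * ||w||^2 + c * sum of epsilon-insensitive losses.

    w: list of weights, b: intercept, xs: list of feature lists, ys: targets.
    """
    flatness = 0.5 * sum(wi * wi for wi in w)
    data_loss = 0.0
    for x, y in zip(xs, ys):
        prediction = sum(wi * xi for wi, xi in zip(w, x)) + b
        data_loss += epsilon_insensitive_loss(y - prediction, epsilon)
    return flatness + c * data_loss


if __name__ == "__main__":
    xs = [[1.0], [2.0], [3.0]]
    ys = [1.2, 3.0, 2.9]
    # For the line y = x, only the point (2, 3.0) lies outside the 0.5-tube.
    print("objective for w=[1.0], b=0:", linear_svr_objective([1.0], 0.0, xs, ys))
    print("objective for w=[0.5], b=1:", linear_svr_objective([0.5], 1.0, xs, ys))
```

Small residuals cost nothing, so the fit is driven by the points outside the tube (the support vectors), balanced against keeping the weights small.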

How input variables are represented is central to most machine learning work. Feature engineering is the manual creation or transformation of input variables to improve model performance. Some models, particularly neural networks, are also capable of automatic feature learning: they learn predictive representations directly from raw inputs, without those features being specified by hand.
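
A small example shows why feature engineering matters. In the sketch below, with hypothetical data following y = x², a straight line fitted on the raw feature x explains none of the variation, while the same least-squares fit on an engineered feature x² explains all of it. Learned representations in neural networks automate this kind of transformation but are beyond a short sketch.

```python
# Minimal sketch: an engineered feature (x^2) lets a linear model fit a U-shape.
# Function names and data are illustrative assumptions.


def fit_simple_ols(xs, ys):
    """Closed-form least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def r_squared(xs, ys, intercept, slope):
    """Share of the variance in ys explained by the fitted line."""
    y_mean = sum(ys) / len(ys)
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    sst = sum((y - y_mean) ** 2 for y in ys)
    return 1.0 - sse / sst


if __name__ == "__main__":
    xs = [-2, -1, 0, 1, 2]
    ys = [x * x for x in xs]  # U-shaped relationship

    b0, b1 = fit_simple_ols(xs, ys)  # raw feature: x
    print("R^2 with raw x:", r_squared(xs, ys, b0, b1))

    x_sq = [x * x for x in xs]  # engineered feature: x^2
    c0, c1 = fit_simple_ols(x_sq, ys)
    print("R^2 with engineered x^2:", r_squared(x_sq, ys, c0, c1))
```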

The strength of machine learning regression lies not only in its ability to handle large datasets and complex relationships but also in its adaptability to various data types. Traditional methods expect a table of numerical (or numerically encoded) predictors; ML techniques can also work with images, text, and other unstructured data by converting them into numerical representations through feature extraction methods such as convolution and word embeddings. This versatility is essential in modern data analytics, as the range of data types and sources continues to expand.
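
As a toy illustration of convolution as feature extraction, the sketch below slides a small kernel over a raw 1-D signal to produce numerical features. The hand-picked difference kernel `[-1, 1]` is a hypothetical choice that responds to jumps in the signal; in a convolutional neural network the kernel weights would be learned instead. Strictly speaking, the function computes cross-correlation (the kernel is not flipped), which is what most deep learning "convolution" layers compute.

```python
# Minimal sketch: 1-D "valid" convolution (cross-correlation) as feature extraction.
# The signal and kernel are illustrative assumptions.


def conv1d_valid(signal, kernel):
    """Slide kernel over signal without padding; one output per full overlap."""
    k = len(kernel)
    if k == 0 or k > len(signal):
        raise ValueError("kernel must be non-empty and no longer than the signal")
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


if __name__ == "__main__":
    signal = [0, 0, 0, 5, 5, 5, 0, 0]  # raw input with a rise and a fall
    edge_kernel = [-1, 1]  # responds to changes between neighbouring values
    features = conv1d_valid(signal, edge_kernel)
    print("feature map:", features)
    # Summary features such as these could then be fed to a regression model.
    print("largest rise:", max(features), "largest drop:", min(features))
```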

Machine learning’s adaptability comes at the cost of opacity. The internal workings of complex ML models, such as deep learning networks, can be difficult to interpret, leading to the well-known “black box” problem. This lack of clarity can have implications for trust and accountability, making it challenging to use such methods in fields where interpretability is as vital as accuracy, such as healthcare and judicial decision-making.

Balancing Interpretability with Predictive Power

The choice between traditional regression and machine learning regression techniques often comes down to the trade-off between interpretability and predictive power. Traditional methods, with their explicit equations and statistical significance tests, are hard to beat when the goal is to understand the structure of the data and the relationships within it. They excel where explaining why a prediction was made is crucial, such as in policy-making or when results must stand up in a court of law.

Machine learning techniques, on the other hand, shine at prediction when the complexity of the data exceeds what traditional methods can capture. Because they learn the shape of the relationship from the data instead of assuming a fixed functional form, they are powerful tools for problems with massive datasets, such as those in genomics or image recognition.

The decision regarding which approach to use often depends on the nature of the problem, the quality and quantity of data available, and the ultimate goal of the analysis, be it prediction or interpretation. In practice, analysts may start with traditional methods for initial understanding and graduate to machine learning techniques for refining predictions and dealing with more complex datasets.